Exploring OpenInterpreter’s fusion of AI with actionable tasks, transforming digital workflows, and enhancing productivity through innovation.

Introduction

Large Language Models (LLMs) have advanced to the point where interacting with them can feel like talking to a person: users can ask questions, write code, solve complex problems, and more. A new application has emerged that builds on these capabilities. OpenInterpreter extends what users can do with AI by allowing LLMs to run code on your computer to complete tasks.

With that said, this article will delve into the evolution of LLMs, the limitations they’ve faced in task execution, and how OpenInterpreter is set to redefine the landscape.

Intro To OpenInterpreter

Traditional LLM applications, such as basic chatbots or text generators, are primarily focused on providing information, answering questions, or generating content within a text-based interface. While incredibly powerful for these purposes, they do not typically interact with the external environment or execute tasks beyond generating text.

Open Interpreter, however, bridges the gap between AI-generated insights and actionable execution. This integration of conversational AI with direct action capabilities marks a significant evolution in how we interact with technology, making AI a more tangible and interactive assistant in our daily computer-based tasks.

Think of it as interactive versus static. Traditional LLMs provide responses based on the input they receive (static), without the ability to affect or interact with the user’s environment. Open Interpreter, on the other hand, acts upon its environment, making it a dynamic tool for executing tasks (interactive).

Core Features and Capabilities

This tool extends the functionality of traditional LLM applications with features that broaden the range of tasks that can be automated or executed. Here’s a closer look at its core features and capabilities.

- Code Execution: Open Interpreter allows users to run code snippets directly from the conversation interface. This feature supports various programming languages, enabling developers and technologists to test scripts, automate tasks, or even debug code in real time without leaving the chat interface. If you are worried that executing code could harm your PC, note that the interpreter asks for your approval before running any code.

- Task Automation: Beyond code execution, Open Interpreter can automate a wide range of tasks on your computer. This includes file management operations like renaming files in bulk, automating data entry, generating reports, and even complex workflows that involve multiple steps and applications.

- Browser Control for Research: One of the most distinctive features of Open Interpreter is its ability to control a browser. This enables users to conduct research, scrape web data, and automate browsing tasks directly through the chat interface, offering a seamless integration between conversational AI and web navigation.

- Interactive and Executable Capabilities: Unlike traditional LLM applications that primarily focus on generating text-based responses, Open Interpreter is designed to interact with the operating system and other applications. This means you can ask it to perform actions like opening files, editing documents, and even controlling other software through custom scripts.
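To make the task automation idea concrete, here is a minimal sketch of the kind of script such a tool might write when asked to rename files in bulk. It is an illustration only, not output captured from Open Interpreter; the function name `bulk_rename`, the `.txt` filter, and the numbering scheme are all assumptions chosen for the example.

```python
from pathlib import Path


def bulk_rename(folder: Path, prefix: str, dry_run: bool = True) -> list[tuple[str, str]]:
    """Plan (and optionally apply) renaming every .txt file to <prefix>_<n>.txt.

    Hypothetical helper for illustration. Returns (old_name, new_name) pairs.
    With dry_run=True nothing changes on disk, so the plan can be reviewed first.
    """
    plan = []
    for index, path in enumerate(sorted(folder.glob("*.txt")), start=1):
        plan.append((path.name, f"{prefix}_{index:03d}.txt"))

    # Refuse to continue if a target name is already taken by another file.
    existing = {p.name for p in folder.iterdir()}
    for old, new in plan:
        if new in existing and new != old:
            raise FileExistsError(f"{new} already exists in {folder}")

    if not dry_run:
        for old, new in plan:
            (folder / old).rename(folder / new)
    return plan
```

Calling `bulk_rename(Path("some_folder"), "report")` only returns the plan; passing `dry_run=False` applies it. Defaulting to a dry run mirrors the review-before-approve habit recommended throughout this article.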

How Does OpenInterpreter Actually Work

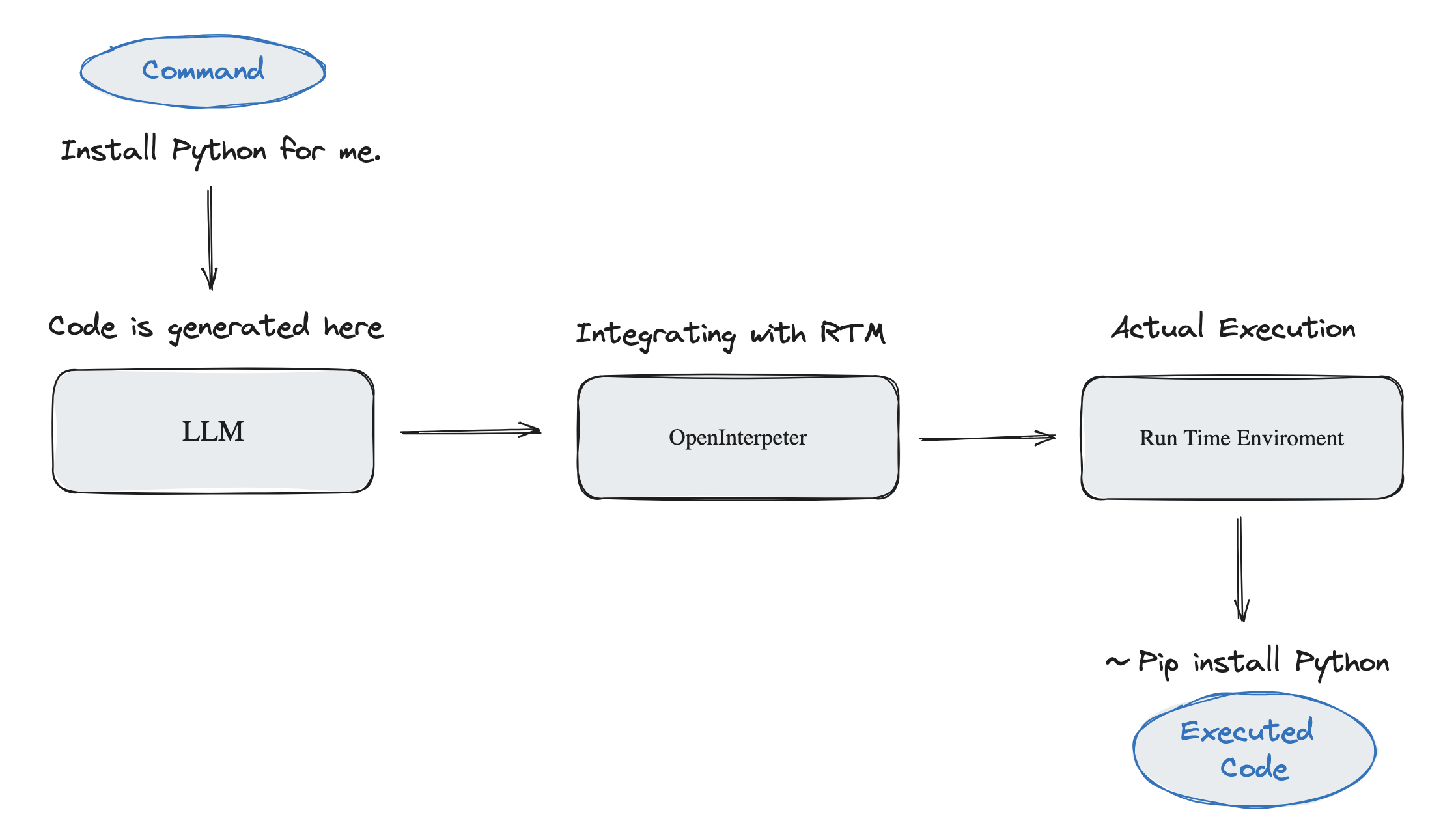

At a deeper level, OpenInterpreter depends on large language models, particularly those based on transformer architectures, to understand the intent and context of the user’s natural language inputs.

These models have been trained on diverse datasets that include code snippets, command sequences, and general language understanding, enabling the system to accurately map conversational inputs to technical commands or code.

So how exactly does OpenInterpreter execute actual commands on your device? It integrates with the programming environments and interpreters already installed on the host system, such as Python’s runtime environment, and passes the generated code to them.

This integration lets OpenInterpreter run code snippets directly in your local environment. Note that, by default, this is not a sandbox: the code runs with the same permissions as the terminal that launched it and can read or modify your files and system settings. The main safeguard is the confirmation prompt shown before each execution, so a harmful snippet can still affect your system if you approve it.

Setting Up OpenInterpreter On your PC

Note: the following steps are completed in the CMD/terminal.

This guide will walk you through installing OpenInterpreter on your personal computer, allowing you to leverage its capabilities for automating tasks, running code, and more. Follow these steps carefully to ensure a successful setup.

Step 1: Install Python 3.10 or higher

First, make sure Python 3.10 or higher is installed on your system, since the open-interpreter package requires it.

How to Check Your Python Version? Access your command line interface (CLI), which can be Command Prompt on Windows, Terminal on macOS, or the equivalent on Linux distributions. Type the following command and press Enter:

| python --version |
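If you prefer to check from inside Python, for example because several Python installations exist on the machine, a short script can compare the running version against the 3.10 minimum stated above. The helper name `meets_minimum` is an assumption made for this sketch.

```python
import sys

MIN_VERSION = (3, 10)  # minimum version stated in this guide


def meets_minimum(version=None, minimum=MIN_VERSION) -> bool:
    """Return True if the given (major, minor, ...) version is new enough."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) >= minimum


current = ".".join(str(part) for part in sys.version_info[:3])
status = "OK" if meets_minimum() else "too old for this guide"
print(f"Python {current}: {status}")
```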

Step 2: Install the OpenInterpreter Tool

After ensuring Python 3.10+ is installed, use the pip package manager to install OpenInterpreter. In your CLI, enter:

| pip install open-interpreter |

The above command instructs pip to fetch the OpenInterpreter package from the Python Package Index (PyPI) and install it on your machine.

In this step, should you encounter a prompt to update pip, comply with the recommendation by running:

| pip install --upgrade pip |

Upgrading pip ensures compatibility and security, as well as access to the latest features and fixes.

Should you encounter any compatibility issues, try bypassing the Python version requirement (not generally recommended for production environments) with:

| pip install open-interpreter --ignore-requires-python |

However, use this workaround cautiously: it overrides the package’s specified Python version requirement, which can lead to unexpected behavior, and the tool may still not work.

Step 3: Run the OpenInterpreter Tool

To awaken OpenInterpreter on your device, simply type the following into your CLI:

| interpreter |

This command launches OpenInterpreter, ready to accept your instructions and perform tasks.

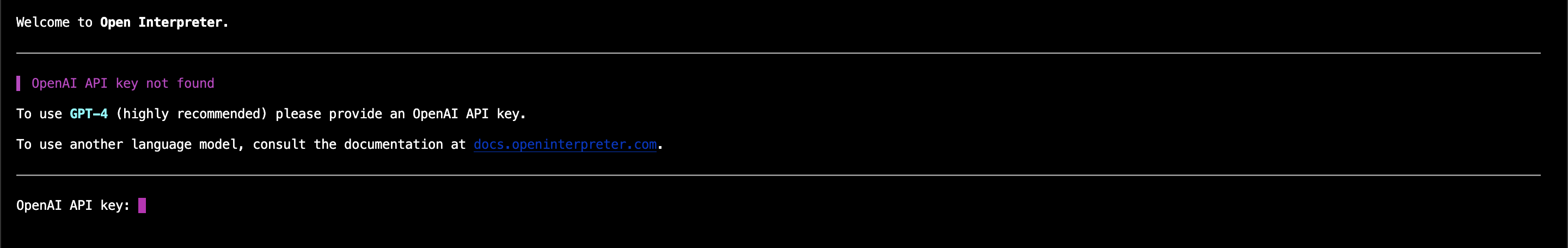

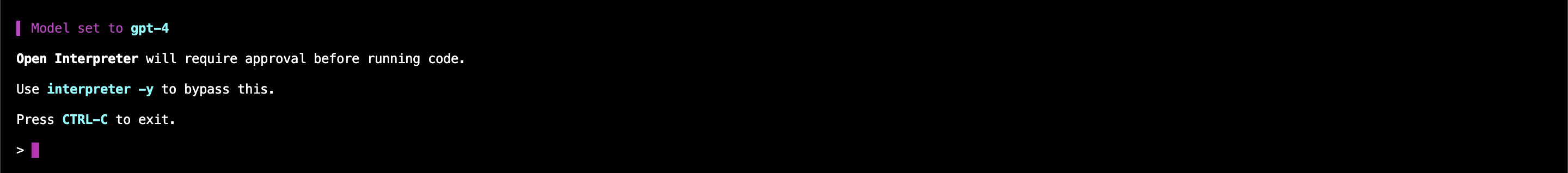

Upon running, you’ll be prompted to select from the available configurations, such as GPT-4 (note: this might require an OpenAI API key) or other default options provided by the tool.

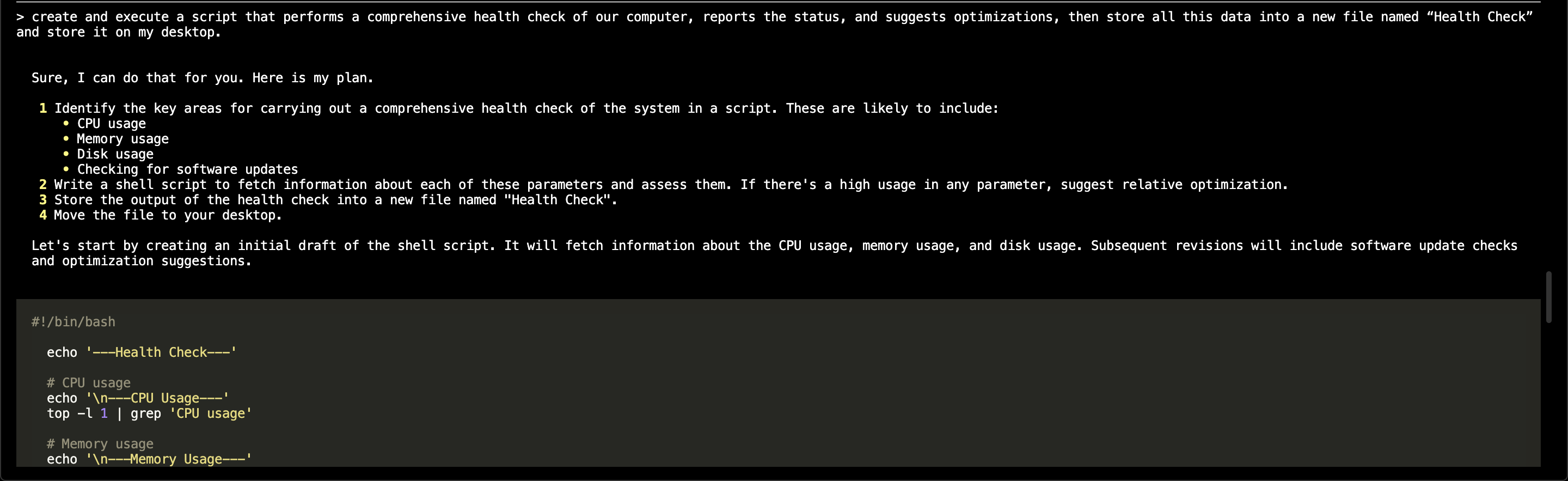

A straightforward way to validate the installation is to give OpenInterpreter a simple task. For this example, we will ask it to create and execute a script that performs a comprehensive health check of the computer, reports the status, and suggests optimizations, then saves the results to a new file named “Health Check” on the desktop.

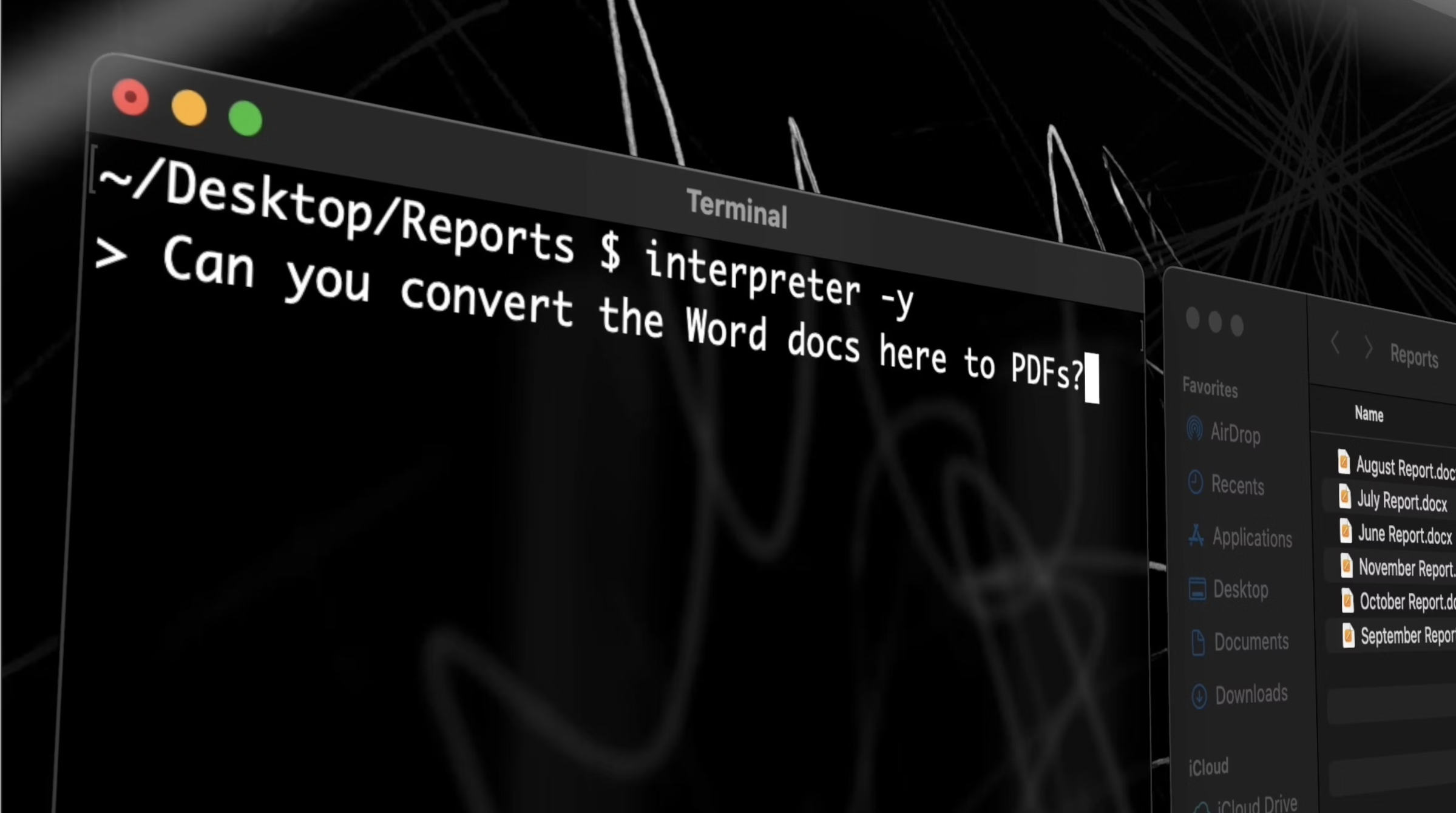

Before each execution, Open Interpreter asks whether you want to run the script or code it has written. If you want the model to execute code without asking for permission, launch it with the -y flag: interpreter -y.

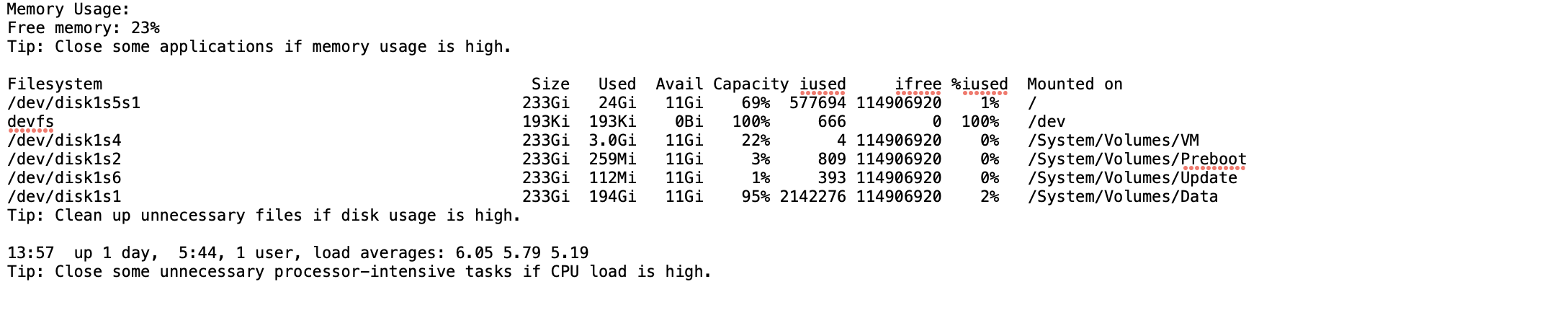

After executing the command, navigate to your desktop. There you will find a newly created “Health Check” file that contains the health metrics, along with a tip on how to reduce the usage of each resource.

In the above case, using GPT-4, OpenInterpreter started by writing a script that checks the memory in use on my device, along with storage and other statistics. The script then saves the data, with a single tip for each resource, to a new file on the desktop.
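For readers curious what such a generated script might look like, here is a rough sketch that uses only the Python standard library. It is not the script produced in the screenshots: the function names, the 90% threshold, and the choice of metrics are assumptions, and it reports disk and CPU information only, since memory statistics would need a third-party package.

```python
import os
import platform
import shutil
from pathlib import Path


def collect_health(path=None) -> dict:
    """Gather a few basic system metrics (hypothetical selection)."""
    target = Path(path) if path else Path.home()
    usage = shutil.disk_usage(target)
    used_pct = round(100 * usage.used / usage.total, 1) if usage.total else 0.0
    return {
        "system": platform.system(),
        "cpu_count": os.cpu_count(),
        "disk_total_gb": round(usage.total / 1e9, 1),
        "disk_free_gb": round(usage.free / 1e9, 1),
        "disk_used_pct": used_pct,
    }


def format_report(stats: dict) -> str:
    """Turn the metrics into text and append one tip about disk usage."""
    lines = [f"{key}: {value}" for key, value in stats.items()]
    if stats["disk_used_pct"] > 90:  # threshold chosen for illustration
        lines.append("Tip: disk is over 90% full; consider removing unused files.")
    else:
        lines.append("Tip: disk usage looks fine.")
    return "\n".join(lines)


def write_report(out_file: Path, path=None) -> Path:
    """Write the formatted report to out_file and return its path."""
    out_file.write_text(format_report(collect_health(path)), encoding="utf-8")
    return out_file
```

Calling `write_report(Path.home() / "Desktop" / "Health Check.txt")` would place the report on the desktop on systems where that folder exists at that location.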

You can also give it more complex tasks: Open Interpreter will write and execute whatever scripts the task requires, so the main limit is what you think to ask for.

To learn more about the Open Interpreter tool, please check the tool’s GitHub documentation.

At this stage, OpenInterpreter empowers you to tackle a wide array of daily tasks, provided the terminal has the necessary permissions. However, it’s important to recognize that tools enabling code execution are relatively nascent and have considerable room for enhancement. Therefore, it’s advisable to personally evaluate their performance, discerning their strengths and current limitations in executing various tasks.

Key Notes

First and most importantly, use the code execution feature safely. The ability to execute code directly is powerful, so use it judiciously. Always review the code for potential security issues before running it, especially if it comes from external sources. Note that some changes may not be reversible, which can leave you with unwanted side effects. Open Interpreter asks for confirmation before executing any code, so read each prompt carefully before approving it.

Familiarize yourself with OpenInterpreter’s diverse capabilities through hands-on experimentation. The more you explore, the more you’ll uncover its potential to streamline your workflow.

Lastly, keep an eye out for new updates and features. OpenInterpreter, like any software, is continually evolving. Regular updates can introduce new functionalities or improvements to enhance your experience.

Conclusion

OpenInterpreter offers a new way to interact with AI: it bridges the gap between conversational AI’s theoretical potential and practical execution on computers. This tool not only enhances productivity and creativity through task automation and browser control but also makes advanced automation accessible to a broader audience.

As we look to the future, OpenInterpreter’s integration into daily workflows signifies a new era in technology, transforming AI from a passive assistant to an active participant in digital tasks, setting a new benchmark for AI applications.